In recent years, videogame developers and computer scientists have been trying to devise techniques that can make gaming experiences increasingly immersive, engaging, and realistic. These include methods to automatically create videogame characters inspired by real people.

Most existing methods to create and customize videogame characters require players to adjust the features of their character’s face manually in order to recreate their own face or the faces of other people. More recently, some developers have worked on methods that customize a character’s face automatically by analyzing images of real people’s faces. However, these methods are not always effective and do not always reproduce the faces they analyze realistically.

Researchers have recently created MeInGame, a deep learning technique that can automatically generate character faces by analyzing a single portrait of a person’s face.

They proposed an automatic character face creation method that predicts both facial shape and texture from a single portrait and can be integrated into most existing 3D games.

Some of the automatic character customization systems presented in previous works are based on computational techniques known as 3D morphable face models (3DMMs). While some of these methods have been found to reproduce a person’s facial features with good levels of accuracy, the way in which they represent geometrical properties and spatial relations (i.e., topology) often differs from the meshes utilized in most 3D videogames.
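To make the idea of a 3DMM more concrete, here is a minimal sketch of how such models are commonly formulated: a face shape is expressed as a mean face plus a weighted sum of basis shapes. This is not the MeInGame code; the function name, array sizes, and random values are hypothetical and chosen only for illustration.

```python
import numpy as np

def reconstruct_shape(mean_shape, basis, coeffs):
    """Return (n_vertices, 3) vertex positions from a linear 3DMM.

    mean_shape: (n_vertices, 3) average face
    basis:      (n_vertices * 3, n_components) shape basis (e.g. from PCA)
    coeffs:     (n_components,) per-face coefficients
    """
    flat = mean_shape.reshape(-1) + basis @ coeffs
    return flat.reshape(-1, 3)

# Toy data standing in for a real model (assumption: sizes are arbitrary).
rng = np.random.default_rng(0)
n_vertices, n_components = 5, 3
mean_shape = rng.normal(size=(n_vertices, 3))
basis = rng.normal(size=(n_vertices * 3, n_components))
coeffs = np.array([0.5, -1.0, 0.25])

vertices = reconstruct_shape(mean_shape, basis, coeffs)
```

Because the vertex count and ordering are fixed by the model's basis, the resulting mesh follows the 3DMM's own topology, which is why its shape has to be transferred to a game's template mesh before it can be used in that game.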

In order for 3DMMs to reproduce the texture of a person’s face reliably, they typically need to be trained on large datasets of images and on related texture data. Compiling these datasets can be fairly time-consuming. Moreover, these datasets do not always contain real images collected in the wild, which can prevent models trained on them from performing consistently well when presented with new data.

Given an input face photo, they first reconstruct a 3D face based on a 3D morphable face model (3DMM) and convolutional neural networks (CNNs), then transfer the shape of that 3D face to the game's template mesh. The proposed network takes the face photo and the unwrapped coarse UV texture map as input, then predicts lighting coefficients and refined texture maps.
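The article does not say how the predicted lighting coefficients are parameterized or used. As a rough illustration of why estimating lighting helps, the sketch below assumes a simple model with four coefficients (one ambient term plus one per normal direction) and divides the resulting shading out of a lit texture. The function names and the lighting model are assumptions, not the authors' implementation.

```python
import numpy as np

def shading_from_lighting(normals, light):
    """Per-texel shading under an assumed 4-coefficient lighting model.

    normals: (H, W, 3) unit surface normals
    light:   (4,) coefficients [ambient, x, y, z]
    """
    ones = np.ones_like(normals[..., :1])
    basis = np.concatenate([ones, normals], axis=-1)  # (H, W, 4)
    return basis @ light                               # (H, W)

def remove_lighting(texture, normals, light, eps=1e-6):
    """Divide the estimated shading out of a lit (H, W, 3) texture."""
    shading = np.clip(shading_from_lighting(normals, light), eps, None)
    return texture / shading[..., None]

# Toy example: a flat patch facing +z with a uniform base color.
H, W = 4, 4
normals = np.zeros((H, W, 3))
normals[..., 2] = 1.0
light = np.array([0.5, 0.0, 0.0, 0.4])
albedo = np.full((H, W, 3), 0.6)

lit = albedo * shading_from_lighting(normals, light)[..., None]
recovered = remove_lighting(lit, normals, light)
```

In this toy setup the recovered texture matches the original base color, since the same lighting model is used to add and remove the shading. With a real photo, the lighting has to be estimated, so the result is only as good as that estimate.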

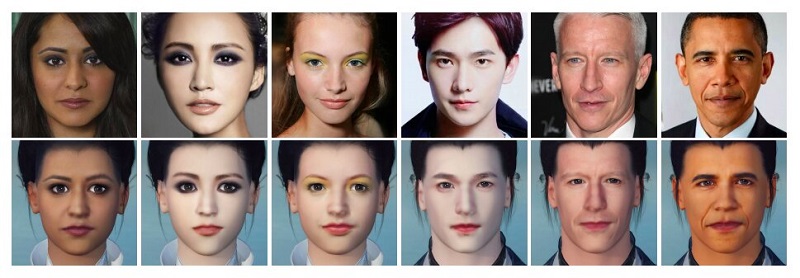

They evaluated their deep learning technique in a series of experiments, comparing the quality of the game characters it generated with that of character faces produced by other existing state-of-the-art methods for automatic character customization. Their method performed remarkably well, generating character faces that closely resembled those in input images.

The proposed method not only produces detailed and vivid game characters similar to the input portrait, but can also eliminate the influence of lighting and occlusions. Experiments show that this method outperforms state-of-the-art methods used in games.

In the future, the character face generation method devised by this team of researchers could be integrated within a number of 3D videogames, enabling the automatic creation of characters that closely resemble real people.