The generative artificial intelligence (AI) revolution is here. With the growing demand for generative AI use cases across verticals with diverse requirements and computational demands, there is a clear need for a refreshed computing architecture custom-designed for AI. It starts with a neural processing unit (NPU) designed from the ground up for generative AI, while leveraging a heterogeneous mix of processors, such as the central processing unit (CPU) and graphics processing unit (GPU). By using an appropriate processor in conjunction with an NPU, heterogeneous computing maximizes application performance, thermal efficiency and battery life to enable new and enhanced generative AI experiences.

Why is heterogeneous computing important?

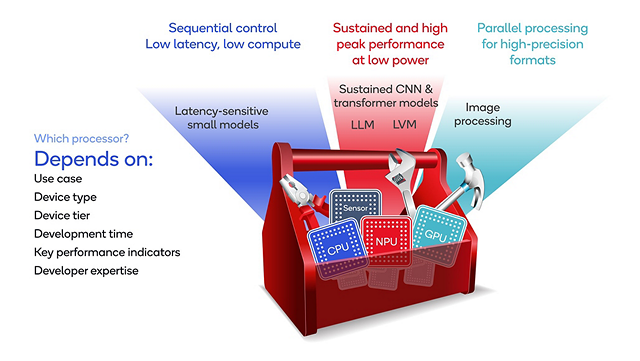

Because of the diverse requirements and computational demands of generative AI, different processors are needed. A heterogeneous computing architecture with processing diversity gives the opportunity to use each processor’s strengths, namely an AI-centric custom-designed NPU, along with the CPU and GPU, each excelling in different task domains. For example, the CPU excels at sequential control and immediacy, the GPU at streaming parallel data, and the NPU at core AI workloads with scalar, vector and tensor math.

By matching each workload to the processor best suited to it, heterogeneous computing maximizes application performance, device thermal efficiency and battery life, which in turn improves generative AI end-user experiences.

What is an NPU?

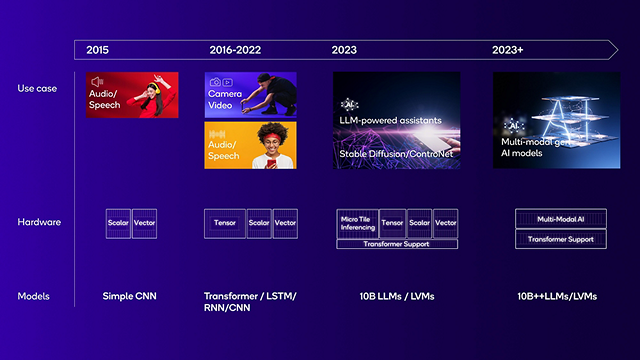

The NPU is built from the ground up for accelerating AI inference at low power, and its architecture has evolved along with the development of new AI algorithms, models and use cases. AI workloads primarily consist of calculating neural network layers composed of scalar, vector, and tensor math followed by a non-linear activation function. A superior NPU design makes the right design choices to handle these AI workloads and is tightly aligned with the direction of the AI industry.
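To make the layer computation described above concrete, here is a minimal sketch in plain Python of one fully connected layer followed by a non-linear activation. It is an illustration only, not Hexagon NPU code: the function name `dense_relu`, the choice of ReLU as the activation, and the sample values are all assumptions made for this example.

```python
def dense_relu(weights, bias, x):
    """Compute relu(W @ x + b) for one fully connected layer using plain lists.

    Hypothetical helper for illustration; ReLU is an assumed activation.
    """
    outputs = []
    for row, b in zip(weights, bias):
        # Vector math: dot product of one weight row with the input vector,
        # plus a scalar bias. Doing this for every row is the matrix-vector
        # (tensor) part of the layer.
        pre_activation = sum(w * xi for w, xi in zip(row, x)) + b
        # Non-linear activation: ReLU clamps negative values to zero.
        outputs.append(max(0.0, pre_activation))
    return outputs


# Illustrative values only.
W = [[1.0, -2.0], [0.5, 0.5]]
b = [0.0, -1.0]
x = [1.0, 2.0]
y = dense_relu(W, b, x)
print(y)  # [0.0, 0.5]
```

A real model stacks many such layers over much larger tensors, which is why hardware built around this multiply-accumulate-then-activate pattern can pay off in both speed and power.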

Our leading NPU and heterogeneous computing solution

Qualcomm is enabling intelligent computing everywhere. Our industry-leading Qualcomm Hexagon NPU is designed for sustained, high-performance AI inference at low power. What differentiates our NPU is our system approach, custom design and fast innovation. By custom-designing the NPU and controlling the instruction set architecture (ISA), we can quickly evolve and extend the design to address bottlenecks and optimize performance.

The Hexagon NPU is a key processor in our best-in-class heterogeneous computing architecture, the Qualcomm AI Engine, which also includes the Qualcomm Adreno GPU, Qualcomm Kryo or Qualcomm Oryon CPU, Qualcomm Sensing Hub, and memory subsystem. These processors are engineered to work together and run AI applications quickly and efficiently on device.

Our industry-leading performance in AI benchmarks and real generative AI applications exemplifies this. Read the whitepaper for a deeper dive on our NPU, our other heterogeneous processors, and our industry-leading AI performance on Snapdragon 8 Gen 3 and Snapdragon X Elite.

Enabling developers to accelerate generative AI applications

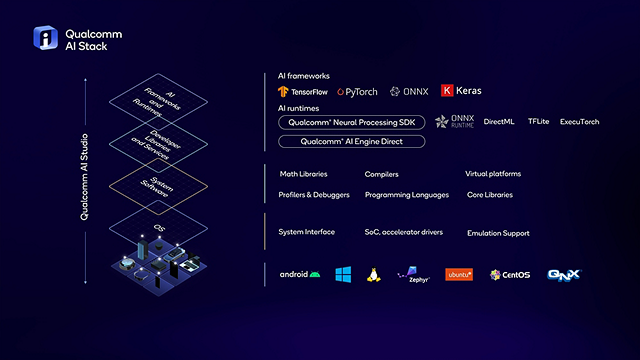

We enable developers by focusing on ease of development and deployment across the billions of devices worldwide powered by Qualcomm and Snapdragon platforms. Using the Qualcomm AI Stack, developers can create, optimize and deploy their AI applications on our hardware, writing once and deploying across different products and segments using our chipset solutions.

The combination of technology leadership, custom silicon designs, full-stack AI optimization and ecosystem enablement sets Qualcomm Technologies apart to drive the development and adoption of on-device generative AI. Qualcomm Technologies is enabling on-device generative AI at scale.

Director, Technical Marketing, Qualcomm Technologies, Inc.