Courtesy: NVIDIA

Physical AI models, which power robots, autonomous vehicles, and other intelligent machines, must be safe, generalized for dynamic scenarios, and capable of perceiving, reasoning and operating in real time. Unlike large language models that can be trained on massive datasets from the internet, physical AI models must learn from data grounded in the real world.

However, collecting sufficient data that covers this wide variety of scenarios in the real world is incredibly difficult and, in some cases, dangerous. Physically based synthetic data generation offers a key way to address this gap.

NVIDIA recently released updates to its NVIDIA Cosmos open world foundation models (WFMs) to accelerate data generation for testing and validating physical AI models. Using NVIDIA Omniverse libraries and Cosmos, developers can generate physically based synthetic data at large scale.

Cosmos Predict 2.5 now unifies three separate models — Text2World, Image2World, and Video2World — into a single lightweight architecture that generates consistent, controllable multicamera video worlds from a single image, video, or prompt.

Cosmos Transfer 2.5 enables high-fidelity, spatially controlled world-to-world style transfer to amplify data variation. Developers can add new weather, lighting and terrain conditions to their simulated environments across multiple cameras. Cosmos Transfer 2.5 is 3.5x smaller than its predecessor, delivering faster performance with improved prompt alignment and physics accuracy.
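
To make the idea of amplifying data variation concrete, the sketch below enumerates combinations of weather, lighting and terrain conditions as text prompts. It is a minimal illustration in plain Python: the condition lists, the `build_variation_prompts` helper and the prompt wording are all assumptions made for this example. It does not call Cosmos Transfer or reflect its actual interface.

```python
from itertools import product

# Hypothetical condition lists; a real project would choose its own.
WEATHER = ["clear", "rain", "fog"]
LIGHTING = ["midday", "dusk", "night"]
TERRAIN = ["asphalt", "gravel"]


def build_variation_prompts(base_scene: str) -> list[str]:
    """Return one prompt per weather/lighting/terrain combination."""
    return [
        f"{base_scene}, {weather} weather, {lighting} lighting, {terrain} terrain"
        for weather, lighting, terrain in product(WEATHER, LIGHTING, TERRAIN)
    ]


prompts = build_variation_prompts("warehouse loading dock")
print(len(prompts))  # 3 * 3 * 2 = 18 combinations
print(prompts[0])
```

Even three short lists multiply into 18 distinct conditions for a single scene, which is why a small set of simulated clips can be expanded into a much broader dataset.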

These WFMs can be integrated into synthetic data pipelines running in the NVIDIA Isaac Sim open-source robotics simulation framework, built on the NVIDIA Omniverse platform, to generate photorealistic videos that reduce the simulation-to-real gap. Developers can reference a four-part pipeline for synthetic data generation:

- NVIDIA Omniverse NuRec neural reconstruction libraries for reconstructing a digital twin of a real-world environment in OpenUSD, starting with just a smartphone.

- SimReady assets to populate a digital twin with physically accurate 3D models.

- The MobilityGen workflow in Isaac Sim to generate synthetic data.

- NVIDIA Cosmos for augmenting generated data.
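
The four parts above can be read as an ordered sequence of stages, each consuming the output of the previous one. The sketch below models only that ordering. The `Stage` class, the stage functions and the placeholder values are hypothetical stand-ins written for this example. None of them are NuRec, SimReady, Isaac Sim or Cosmos APIs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    """One step of the pipeline: a label plus a function that updates shared state."""
    name: str
    run: Callable[[dict], dict]


# Placeholder stage functions. Each only records what a real stage would produce.
def reconstruct_scene(state: dict) -> dict:
    return {**state, "scene": f"digital twin of {state['capture']}"}


def populate_assets(state: dict) -> dict:
    return {**state, "assets": ["pallet", "forklift"]}


def generate_data(state: dict) -> dict:
    return {**state, "clips": [f"clip_{i}" for i in range(3)]}


def augment_data(state: dict) -> dict:
    augmented = [f"{clip}_augmented" for clip in state["clips"]]
    return {**state, "clips": state["clips"] + augmented}


PIPELINE = [
    Stage("reconstruct (NuRec)", reconstruct_scene),
    Stage("populate (SimReady assets)", populate_assets),
    Stage("generate (MobilityGen in Isaac Sim)", generate_data),
    Stage("augment (Cosmos)", augment_data),
]


def run_pipeline(capture: str) -> dict:
    """Run every stage in order, passing the accumulated state along."""
    state = {"capture": capture}
    for stage in PIPELINE:
        state = stage.run(state)
    return state


result = run_pipeline("smartphone_scan")
print(result["scene"])
print(len(result["clips"]))  # 3 generated + 3 augmented = 6
```

Keeping each stage behind the same small interface means one step, such as the augmentation, can be swapped or skipped without touching the others.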

From Simulation to the Real World

Leading robotics and AI companies are already using these technologies to accelerate physical AI development.

Skild AI, which builds general-purpose robot brains, is using Cosmos Transfer to augment existing data with new variations for testing and validating robotics policies trained in NVIDIA Isaac Lab.

Skild AI uses Isaac Lab to create scalable simulation environments where its robots can train across embodiments and applications. By combining Isaac Lab robotics simulation capabilities with Cosmos’ synthetic data generation, Skild AI can train robot brains across diverse conditions without the time and cost constraints of real-world data collection.

Serve Robotics uses synthetic data generated from thousands of simulated scenarios in NVIDIA Isaac Sim. The synthetic data is then used in conjunction with real data to train physical AI models. The company has built one of the largest autonomous robot fleets operating in public spaces and has completed over 100,000 last-mile meal deliveries across urban areas. Serve’s robots collect 1 million miles of data monthly, including nearly 170 billion image-lidar samples, which are used in simulation to further improve robot models.

See How Developers Are Using Synthetic Data

Lightwheel, a simulation-first robotics solution provider, is helping companies bridge the simulation-to-real gap with SimReady assets and large-scale synthetic datasets. With high-quality synthetic data and simulation environments built on OpenUSD, Lightwheel’s approach helps ensure robots trained in simulation perform effectively in real-world scenarios, from factory floors to homes.

Data scientist and Omniverse community member Santiago Villa is using synthetic data with Omniverse libraries and Blender software to improve mining operations by identifying large boulders that halt operations.

Undetected boulders entering crushers can cause delays of seven minutes or more per incident, costing mines up to $650,000 annually in lost production. Using Omniverse to generate thousands of automatically annotated synthetic images across varied lighting and weather conditions dramatically reduces training costs while enabling mining companies to improve boulder detection systems and avoid equipment downtime.

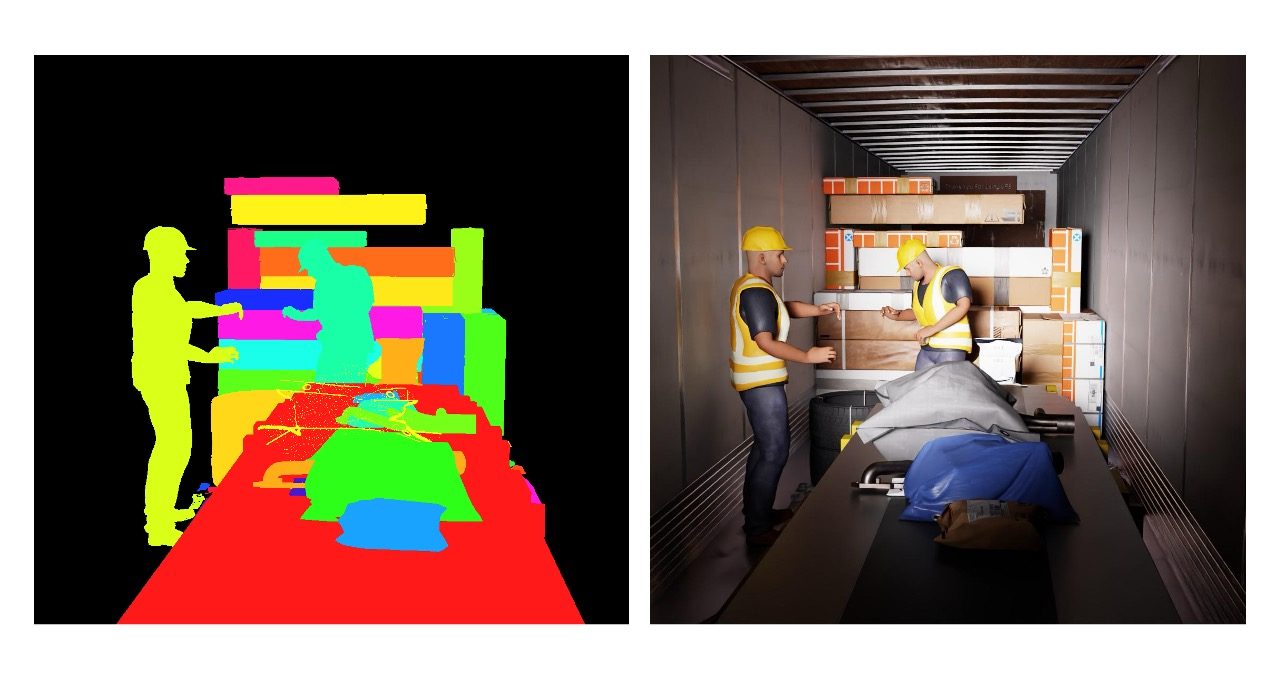

FS Studio partnered with a global logistics leader to improve AI-driven package detection by creating thousands of photorealistic package variations in different lighting conditions using Omniverse libraries like Replicator. The synthetic dataset dramatically improved object detection accuracy and reduced false positives, delivering measurable gains in throughput speed and system performance across the customer’s logistics network.

Robots for Humanity built a full simulation environment in Isaac Sim for an oil and gas client using Omniverse libraries to generate synthetic data, including depth, segmentation and RGB images, while collecting joint and motion data from the Unitree G1 robot through teleoperation.

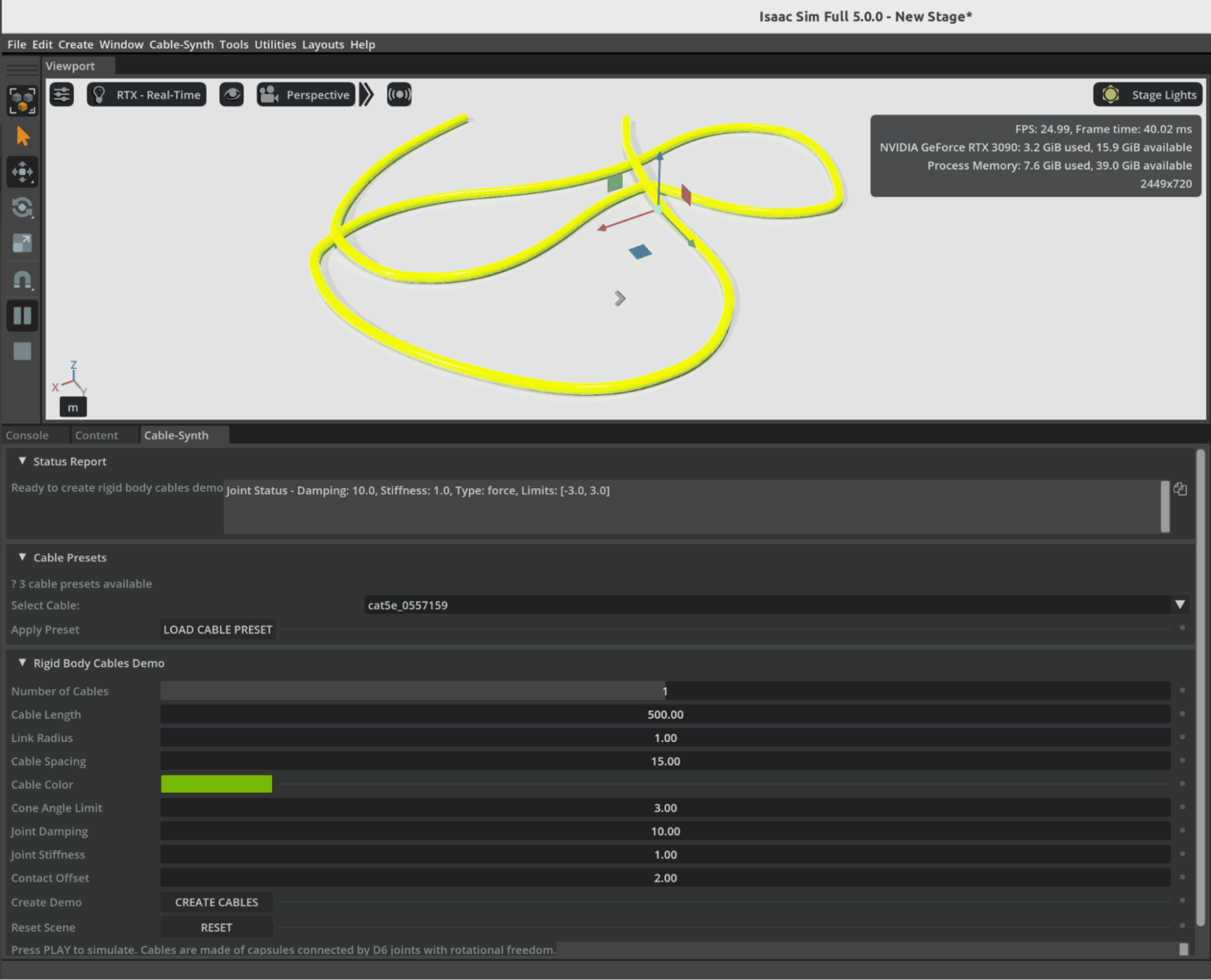

Omniverse Ambassador Scott Dempsey is developing a synthesizer for synthetic data generation that builds various cables from real-world manufacturer specifications. It uses Isaac Sim to generate synthetic data, which is then augmented with Cosmos Transfer to create photorealistic training datasets for applications that detect and handle cables.

Conclusion

As physical AI systems continue to move from controlled labs into the complexity of the real world, the need for vast, diverse, and accurate training data has never been greater. Physically based synthetic worlds, driven by open world foundation models and high-fidelity simulation platforms like Omniverse, offer a powerful solution to this challenge. They allow developers to safely explore edge cases, scale data generation well beyond what real-world collection allows, and accelerate the validation of robots and autonomous machines destined for dynamic, unpredictable environments.

The examples from industry leaders show that this shift is already well underway. Synthetic data is strengthening robotics policies, improving perception systems, and drastically reducing the gap between simulation and real-world performance. As tools like Cosmos, Isaac Sim, and OpenUSD-driven pipelines mature, the creation of rich virtual worlds will become as essential to physical AI development as datasets and GPUs have been for digital AI.

In many ways, we are witnessing the emergence of a new engineering paradigm, one where intelligent machines learn first in virtual environments grounded in real physics, and only then step confidently into the physical world. Omniverse is not just a place to simulate; it is becoming the training ground for the next generation of autonomous systems.